Nobel Prize History

The Nobel Prize is widely regarded as the most prestigious award in the scientific world. It was created according to Alfred Nobel’s will, which called for prizes “to those who, during the preceding year, have conferred the greatest benefit to humankind” in physics, chemistry, physiology or medicine, literature, and peace.

A sixth prize, for economic sciences, was created later (in 1968) by Sweden’s central bank. Officially called the Sveriges Riksbank Prize in Economic Sciences in Memory of Alfred Nobel, it is better known as the Nobel Prize in Economics.

The decision of who receives each prize rests with several Swedish academic institutions, except for the Peace Prize, which is awarded by the Norwegian Nobel Committee.

Legacy Concerns

Alfred Nobel decided to create the Nobel Prize after reading his own obituary, published by mistake by a French newspaper that had confused the news of his brother’s death with his own. Titled “The Merchant of Death Is Dead”, the article condemned Nobel for his explosives, of which dynamite was the most famous.

His inventions were very influential in shaping modern warfare, and Nobel also purchased a large iron and steel mill that he turned into a major armaments manufacturer. As a chemist, engineer, and inventor first and foremost, Nobel realized that he did not want to be remembered as a man who had made a fortune from war and the death of others.

Nobel Prize

Today, Nobel’s fortune is held in a fund invested to generate income that finances the Nobel Foundation and the prizes themselves: a gold-plated green gold medal, a diploma, and a monetary award of 11 million SEK (around $1M) for the winners.

Source: Britannica

Often, the prize money is divided between several winners, especially in scientific fields where it is common for two or three leading figures to contribute, together or in parallel, to a groundbreaking discovery.

Nobel Prize In Computing?

Usually, the Nobel Prize in Physics is awarded for discoveries about the fundamental nature of the Universe, like black holes (2020) or exoplanets (2019). It can also reward important advances in experimental and applied physics, like attosecond light pulses (2023), laser physics (2018), or blue LEDs (2014).

But this year’s winners are more unconventional, with a focus on machine learning. The prize was awarded to John Hopfield and Geoffrey Hinton “for foundational discoveries and inventions that enable machine learning with artificial neural networks.”

Source: Nobel Prize

This is a very timely Nobel Prize, as neural networks are the core technology behind most of the recent advances in AI, including LLMs, but also machine vision (including for self-driving vehicles) and specialized AIs used in drug discovery, materials science, etc.

What Are Neural Networks?

Chips Versus Neurons

When computers were invented, hopes quickly grew that their capacity would eventually expand to human-like intellectual abilities. But in practice, while computers became increasingly impressive at calculation, and later at image generation, they stayed “dumb” when it came to actual reasoning.

In large part, this is because a computer chip is very different from the neurons that form the brain. While a silicon chip can very quickly perform millions of binary calculations (0 or 1), neurons behave more like an analog signal, with plenty of complexity and “noise”.

As a result, computers need precise programming to perform a calculation: they cannot really “learn” anything and simply follow their coded instructions to the letter.

Neural networks are different. They can tackle problems that are too vague and complicated to be handled by step-by-step instructions. One example is interpreting a picture to identify the objects in it, something traditional programs are notoriously bad at, hence the “Are you a human?” tests on some web pages.

Source: Google

How Neurons Work

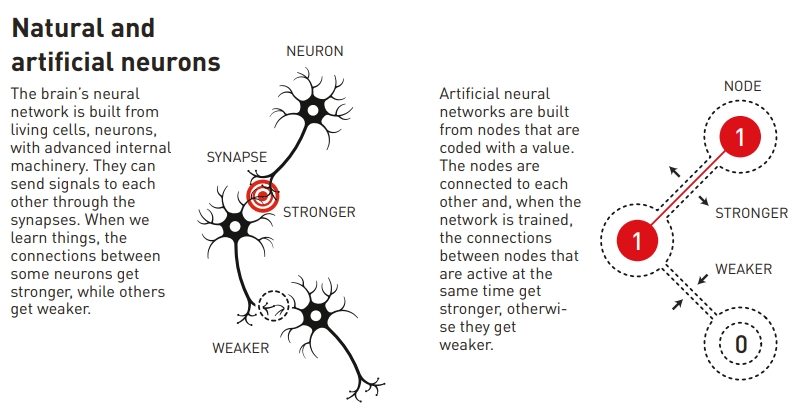

Neurons do not follow a strict pre-established programming for the most part. Instead, they function through a feedback loop of reinforcing connection patterns.

In the brain, the connections between neurons are strengthened when those neurons are active together. This inspired the idea of simulating neurons in a computer as multiple interconnected nodes.

The connections between these nodes can be made stronger or weaker, a process called “training” that remains at the core of creating the latest AIs.

Source: Nobel Prize

The idea felt promising, but for a while, the computing power required looked prohibitively high, especially for the relatively primitive computers of the 1960s and 1970s.
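The connection-strengthening idea described above is often summarized as Hebbian learning (“neurons that fire together wire together”). Below is a minimal Python sketch of that principle, not of any specific historical model. It assumes nodes take the value +1 (active) or -1 (inactive) and uses an arbitrary learning rate of 0.1; with this encoding, connections between nodes that agree grow stronger, while connections between nodes that disagree become weaker (negative).

```python
import numpy as np

def hebbian_update(weights, activity, learning_rate=0.1):
    """Hebbian rule: change each connection in proportion to how much its two nodes agree.

    activity uses +1 for an active node and -1 for an inactive one (an assumption of this sketch).
    """
    delta = learning_rate * np.outer(activity, activity)  # +lr if nodes agree, -lr if they disagree
    np.fill_diagonal(delta, 0.0)  # no node is connected to itself
    return weights + delta

weights = np.zeros((4, 4))
pattern = np.array([1, 1, -1, -1])  # nodes 0 and 1 active together, nodes 2 and 3 inactive
for _ in range(3):  # "show" the same pattern three times
    weights = hebbian_update(weights, pattern)
print(weights.round(2))
```

After three repetitions, nodes 0 and 1 (and likewise nodes 2 and 3) end up linked by a positive weight, while every cross pair gets a negative one: the network has “remembered” which nodes tend to be active together.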

How Human Memory Works

When we remember or recognize something, we are not performing a mathematically perfect calculation of the color of each pixel of an image. Instead, we iterate from partial data, try to draw an association to something already known, and identify a recognizable pattern.

In 1982, physicist John Hopfield invented the Hopfield network, a method to store patterns and recreate them from incomplete or distorted versions.

Starting With Physics

He started by noticing that collective properties in many physical systems are robust to changes in the model’s details. Notably, magnetic materials derive their special characteristics from atomic spin, a property that makes each atom a tiny magnet.

The properties of the larger material come from each atom’s spin influencing the spins around it, which can lead neighboring spins to line up in the same direction. Hopfield’s model describes this phenomenon using nodes that influence each other.

Similarly, an image can be recorded as a grid of nodes, each with a 0 or 1 value (black or white).

The network stores such an image by adjusting the strength of the connections between nodes, so that the saved image corresponds to a low point in an “energy landscape”. When a distorted image is fed in, the nodes are updated one at a time, each change lowering the network’s energy, like a ball rolling down a slope, until the network settles in the nearest valley. Often, that valley is the original image, recreated despite the distortions.

Hopfield had thus created a primitive but functional system for error correction and pattern completion, something computers are normally incapable of, as they are bound by their strict programming.

Source: Nobel Prize

With enough computational power, each node can store any value, not just black or white. For example, it can hold a number representing an exact color, the same way digital images are encoded.

It can also reflect all kinds of data, not just images, creating a functional model for an artificial associative memory.
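Hopfield’s store-and-recall procedure for the black-and-white case can be sketched in a few lines of Python with NumPy. This is a minimal illustration, not Hopfield’s original code: it assumes the classic formulation in which 0/1 pixels are mapped to -1/+1 node states, patterns are stored with a Hebbian rule, and nodes are updated one at a time toward lower energy. The two 8-pixel patterns are made up for the example.

```python
import numpy as np

def to_spins(bits):
    """Map 0/1 pixels (white/black) to the -1/+1 node states of the classic Hopfield model."""
    return 2 * np.asarray(bits) - 1

def store(patterns):
    """Hebbian storage: W = average of outer products of the patterns, with no self-connections."""
    p = np.asarray(patterns, dtype=float)
    w = p.T @ p / len(p)
    np.fill_diagonal(w, 0.0)
    return w

def energy(w, s):
    """Hopfield energy E = -1/2 * s^T W s; stored patterns sit at the bottom of valleys."""
    return -0.5 * s @ w @ s

def recall(w, s, sweeps=5, seed=0):
    """Update one node at a time toward the sign of its input; no single update raises E."""
    rng = np.random.default_rng(seed)
    s = s.copy()
    for _ in range(sweeps):
        for i in rng.permutation(len(s)):
            s[i] = 1 if w[i] @ s >= 0 else -1
    return s

pattern_a = to_spins([1, 1, 1, 1, 0, 0, 0, 0])
pattern_b = to_spins([1, 0, 1, 0, 1, 0, 1, 0])
w = store([pattern_a, pattern_b])

distorted = pattern_a.copy()
distorted[0] = -distorted[0]  # flip one "pixel"

restored = recall(w, distorted)
print(energy(w, distorted), energy(w, restored))  # -6.0 -12.0
print((restored + 1) // 2)                        # [1 1 1 1 0 0 0 0]
```

Flipping one pixel of the first pattern raises the energy from -12 to -6; updating the nodes one by one rolls the state back down into the valley of the stored image. With too many stored patterns, or patterns that are too similar, the network can instead settle into spurious mixtures, which is why this is kept as a toy example.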

Using Association For Understanding

Even simple animals or small children perform far better than advanced computers traditionally did at pattern recognition, like identifying whether an image contains a dog, a cat, or a squirrel. They do this without any higher-order understanding of concepts like animals, mammals, or species.

And perhaps more importantly, they can do it with very limited initial data and experience, instead of a catalog of billions of images of these animals covering every possible posture, color, shape, or form.

Analog Instead Of Binary

Because the nodes can contain any data, they can be used for continuous data, like phenomena over time.

It also allows efficient calculation of interactions in complex systems, where the response to a stimulus is statistical rather than a binary yes/no. For this sort of problem, it can save both energy and computing power compared to traditional computing.

And by adding more nodes, more complexity can be progressively added to the models.

The Boltzmann Machine

Geoffrey Hinton, together with Terrence Sejnowski and other coworkers, worked on improving and extending the concept of the Hopfield model.

Hinton used ideas from statistical physics, a field describing systems made of many similar elements, like gases or liquids. As the individual components are too numerous to track, the only way to model their behavior is through statistical expectations, instead of a “hard physics” calculation based on each molecule’s location, speed, etc.

To add such capacity to the Hopfield model, he added extra “hidden” nodes alongside the visible ones that previously performed the computation alone. Although they are not directly observed, the hidden nodes contribute to the energy of the other nodes and of the system as a whole.

Source: Nobel Prize

They called this concept the Boltzmann machine, in honor of Ludwig Boltzmann, a 19th-century physicist who developed statistical mechanics, the foundation of statistical physics.

Source: Wikipedia
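To make the statistical-physics idea concrete, here is a minimal Python sketch of a tiny Boltzmann machine with two visible units and one hidden unit, using the standard textbook energy function and the Boltzmann distribution P(s) ∝ exp(-E(s)/T). The weights and biases are invented for illustration, and enumerating every state exactly only works because the network is so small; real Boltzmann machines rely on sampling instead.

```python
import itertools

import numpy as np

def energy(state, w, b):
    """E(s) = -sum_{i<j} w_ij s_i s_j - sum_i b_i s_i for binary (0/1) units.

    w is symmetric with a zero diagonal, so 0.5 * s @ w @ s counts each pair once.
    """
    s = np.asarray(state, dtype=float)
    return -0.5 * s @ w @ s - b @ s

def boltzmann_distribution(w, b, temperature=1.0):
    """Exact P(s) = exp(-E(s)/T) / Z over all 2**n joint states (tiny networks only)."""
    states = list(itertools.product([0, 1], repeat=len(b)))
    unnormalized = np.array([np.exp(-energy(s, w, b) / temperature) for s in states])
    return states, unnormalized / unnormalized.sum()

def prob_unit_on(i, state, w, b, temperature=1.0):
    """Stochastic update rule: unit i turns on with probability sigmoid(energy gap / T)."""
    gap = w[i] @ np.asarray(state, dtype=float) + b[i]
    return 1.0 / (1.0 + np.exp(-gap / temperature))

# Units 0 and 1 are visible, unit 2 is hidden; the visible units connect only via the hidden one.
w = np.array([[0.0, 0.0, 2.0],
              [0.0, 0.0, 2.0],
              [2.0, 2.0, 0.0]])
b = np.array([-1.0, -1.0, -1.0])

states, probs = boltzmann_distribution(w, b)
visible = {}
for s, p in zip(states, probs):
    visible[s[:2]] = visible.get(s[:2], 0.0) + p  # sum out the hidden unit
for v, p in sorted(visible.items()):
    print(v, round(float(p), 3))

print(prob_unit_on(2, (1, 1, 0), w, b, temperature=1.0))    # hidden unit very likely turns on
print(prob_unit_on(2, (1, 1, 0), w, b, temperature=100.0))  # close to a coin flip
```

Although the two visible units have no direct connection, the hidden unit makes them likely to switch on together: the joint state (1, 1) ends up as the most probable visible configuration, at about 0.41 versus about 0.20 for each of the other three. The temperature T controls how random the updates are: at low temperature a unit almost always follows its energy gap, while at high temperature it behaves close to a coin flip.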

Restricted Boltzmann Machine

In its original design, the Boltzmann machine was too complex to be of much practical use. A simplified version, the restricted Boltzmann machine, was developed, with no connections between nodes in the same layer.

Source: Nobel Prize

This made the machine much more efficient, while still allowing it to perform complex pattern recognition.
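The practical payoff of removing same-layer connections is that, given the visible nodes, every hidden node can be computed independently, so a whole layer is updated with a single matrix product (and vice versa). The Python sketch below shows this for binary units with logistic (sigmoid) activation probabilities, the usual textbook formulation; the layer sizes, random initialization, and the `RBM` class itself are illustrative assumptions rather than any particular library’s API.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

class RBM:
    """Minimal restricted Boltzmann machine with binary visible and hidden units (illustrative)."""

    def __init__(self, n_visible, n_hidden, seed=0):
        self.rng = np.random.default_rng(seed)
        # Only visible-to-hidden weights exist: no visible-visible or hidden-hidden connections.
        self.W = self.rng.normal(0.0, 0.1, size=(n_visible, n_hidden))
        self.b_visible = np.zeros(n_visible)
        self.b_hidden = np.zeros(n_hidden)

    def hidden_probs(self, v):
        # Hidden units are conditionally independent given v: one matrix product for the layer.
        return sigmoid(v @ self.W + self.b_hidden)

    def visible_probs(self, h):
        # Symmetrically, visible units are conditionally independent given h.
        return sigmoid(h @ self.W.T + self.b_visible)

    def gibbs_step(self, v):
        """One block-Gibbs step: sample the whole hidden layer, then the whole visible layer."""
        p_h = self.hidden_probs(v)
        h = (self.rng.random(p_h.shape) < p_h).astype(float)
        p_v = self.visible_probs(h)
        v_new = (self.rng.random(p_v.shape) < p_v).astype(float)
        return h, v_new

rbm = RBM(n_visible=6, n_hidden=3)
v = np.array([1, 0, 1, 0, 1, 0], dtype=float)
h, v_new = rbm.gibbs_step(v)
print(rbm.hidden_probs(v).shape, h, v_new)
```

In a full Boltzmann machine, each hidden node also depends on the other hidden nodes, so nodes have to be sampled one at a time; the layer-at-once update above is a large part of why the restricted version is so much cheaper.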

A further improvement was “pre-training” the neural network with a series of restricted Boltzmann machines stacked in layers, one on top of the other.

This gave the neural network a better starting point, boosting its pattern recognition abilities.
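The layer-by-layer pre-training described above can be sketched as follows: train one restricted Boltzmann machine on the data, then feed its hidden-layer activations as “data” to the next one, and so on up the stack. This Python sketch assumes binary units and uses one-step contrastive divergence (CD-1), the approximate training rule commonly used for restricted Boltzmann machines; the learning rate, epoch count, layer sizes, and random toy data are arbitrary choices for illustration. In practice, the resulting weights serve as the starting point of a deep network that is then fine-tuned, a step not shown here.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def train_rbm(data, n_hidden, epochs=50, lr=0.1, seed=0):
    """Train one binary RBM with one-step contrastive divergence (CD-1)."""
    rng = np.random.default_rng(seed)
    n_samples, n_visible = data.shape
    W = rng.normal(0.0, 0.1, size=(n_visible, n_hidden))
    b_v = np.zeros(n_visible)
    b_h = np.zeros(n_hidden)
    for _ in range(epochs):
        # Positive phase: hidden probabilities driven by the real data.
        p_h = sigmoid(data @ W + b_h)
        h = (rng.random(p_h.shape) < p_h).astype(float)
        # Negative phase: reconstruct the visible layer, then recompute the hidden layer.
        p_v = sigmoid(h @ W.T + b_v)
        p_h_recon = sigmoid(p_v @ W + b_h)
        # CD-1 update: data-driven correlations minus reconstruction-driven correlations.
        W += lr * (data.T @ p_h - p_v.T @ p_h_recon) / n_samples
        b_v += lr * (data - p_v).mean(axis=0)
        b_h += lr * (p_h - p_h_recon).mean(axis=0)
    return W, b_h

def pretrain_stack(data, layer_sizes, **train_kwargs):
    """Greedy layer-wise pre-training: each RBM learns from the layer below's hidden activations."""
    layers = []
    inputs = data
    for n_hidden in layer_sizes:
        W, b_h = train_rbm(inputs, n_hidden, **train_kwargs)
        layers.append((W, b_h))
        # The trained layer's hidden probabilities become the next RBM's training data.
        inputs = sigmoid(inputs @ W + b_h)
    return layers

# Toy binary data (random, for illustration only): 100 samples of 8 "pixels".
rng = np.random.default_rng(1)
data = (rng.random((100, 8)) < 0.5).astype(float)
layers = pretrain_stack(data, layer_sizes=[6, 4])
print([W.shape for W, _ in layers])  # [(8, 6), (6, 4)]
```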

Impact On Modern Neural Networks

Hopfield and Hinton both laid down the fundamental ideas behind neural networks, as well as the mathematics and tools to use them.

However, it would take until the 2010s for computing power to grow enough for neural networks to break into mainstream consciousness.

Because modern neural networks use so many layers, they are called “deep” neural networks. To give a perspective on the evolution of the field, Hopfield used a 30-node network in his 1982 publication; with every node connected to every other, that makes 435 connections. In total, this meant fewer than 500 parameters to keep track of.

He also tried a 100-node model, but it was too complicated for the computer he was using at the time. Today’s LLMs (Large Language Models), the basis of tools like ChatGPT, can contain more than a trillion parameters, and their computing needs keep increasing.
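The connection count follows from simple arithmetic: in a fully connected network, each of the n nodes links to the n - 1 others, and each link is shared by two nodes, giving n(n - 1)/2 connections. The small Python helper below (a hypothetical function written for this illustration) also adds one value per node; treating node values plus connection strengths as the “parameters” is an assumption, but it lands just under 500 for Hopfield’s 30-node network.

```python
def hopfield_size(n_nodes: int) -> tuple[int, int]:
    """Return (connections, parameters) for a fully connected network of n_nodes.

    Each unordered pair of nodes shares one symmetric connection: n * (n - 1) / 2.
    Counting one extra value per node as a parameter is an assumption of this sketch.
    """
    connections = n_nodes * (n_nodes - 1) // 2
    return connections, connections + n_nodes

print(hopfield_size(30))   # (435, 465)
print(hopfield_size(100))  # (4950, 5050)
```

Going from 30 to 100 nodes multiplies the number of connections by more than ten, which hints at why the larger model overwhelmed the computers of the early 1980s.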

Applications

Fundamental Physics

Like many other discoveries honored with a Nobel Prize in Physics, neural networks are now enabling new discoveries in turn.

For example, they have been used to sift through and process the vast amounts of data needed to discover the Higgs boson (Peter Higgs shared the Nobel Prize in Physics in 2013). They have also been used in other Nobel Prize-winning research, like measuring gravitational waves from colliding black holes or searching for exoplanets.

Applied Physics

Real-life materials and phenomena are often too complex to be modeled solely with a cause -> effect model at the individual particle level.

In comparison, neural networks’ roots in statistical physics make them much more flexible, so they can either directly give the right answer or “point” scientists in the right direction.

This is now routinely used by top research institutes, for example to calculate the structure and function of protein molecules, or to work out which new versions of a material may have the best properties for more efficient solar cells or batteries.

LLMs

Of course, no conversation about neural network applications would be complete without talking about LLMs, the core of the AI frenzy of the last few years since the release of ChatGPT in 2022.

In large part, this is because human-like language generation that “feels” like a real person was long considered the test of whether an AI is truly intelligent (the Turing Test).

LLMs’ real intelligence is hotly debated, with opinions ranging from AI enthusiasts (or doom-mongers) expecting true intelligence and a technological singularity any time soon, to skeptics who see them as a mere trick unable to deliver useful results.

Nevertheless, they have a wide range of applications, especially where they can cheaply perform tasks that until now required much more expensive human labor. This is particularly true for mostly verbal tasks, like:

- Chatbots and customer service.

- Code development and verification.

- Translation and localization.

- Market research.

- Search and answer questions.

- Analysis of large documents and datasets.

- Education.

Adjacent to LLMs, image generation might also create a whole new flood of images in our digital age, for both useful and potentially nefarious purposes (disinformation, blackmail, etc.).

Machine & Computer Vision

As image recognition was the original application of neural networks, going back to Hopfield, it makes sense that it is now one of their core applications.

Sometimes, machine vision is used to describe limited identification in a more controlled industrial setting, while computer vision aims for a more human-like vision.

Source: Solomon

Self-Driving

A major sub-segment of this field is self-driving vehicles, since human drivers ultimately rely on visual pattern recognition to safely steer their multi-ton vehicles.

For now, most self-driving systems rely on a mix of cameras and other sensors (like radar and LIDAR) to stay safe, with the exception of Tesla, which focuses exclusively on human-like machine vision (backed by a much larger dataset provided by its fleet of cars).

Enough training data, manual annotation of millions of complex real-life situations, and custom hardware that reduces power consumption and speeds up reaction times will likely be the winning combination for the first company to claim the trillion-dollar prize of true level 5 full driving automation.

Medical

Many diagnoses in medicine do not depend on lab tests but on a trained specialist’s manual interpretation of images produced by X-ray machines, MRI, CT scanners, etc.

It can take an expert years to become truly proficient at this task, which is as much art as it is science. AI, by contrast, can cheaply and consistently analyze millions of medical images with predictable results.

It could even be used to diagnose conditions that were previously not thought to be detectable with scans, like ADHD.

Less obviously, AI using neural networks can also assist surgeons, as with the French company Pixee Medical, whose system enables 3D tracking with a smartphone or smart glasses during orthopedic surgery.

AI can also automate the crucial task of documenting the surgery, especially its repetitive and error-prone parts. As surgeons leave instruments inside patients in about 1,500 surgeries per year in the US, computer vision could help eliminate this issue by automatically keeping track of everything used during the operation.

Bioresearch

From drug discovery to predicting protein folding, neural networks are powerful tools for researchers in medicine and biology.

This is already revolutionizing how new therapies are developed, as we discussed in “Top 5 AI & Digital Biotech Companies”. It could also contribute to more consistent data, which is often a problem in the biological sciences, where much data is still produced manually, like counting blood cells.

This can be solved with AI machine vision, with products like Shonit from Sigtuple now able to provide reliable and standardized counts of all types of blood cells.

Source: Sigtuple

Industry & Production

From quality control and component inspection to fully automated assembly, machine/computer vision can make production lines more flexible and reactive to any shift in the production process.

This can reduce costs by lowering the need for human input, as well as improve quality and production speed.

Robotics

As robots leave the factory floor to interact with more complex everyday environments, they need to quickly step up their pattern recognition capabilities.

From robodogs to delivery robots, and maybe soon humanoid personal assistants, robots are increasingly merging with AI using neural networks to help us in daily tasks.

Farming

Farming robots increasingly use machine vision to identify weeds or ripe fruits, replacing tedious manual labor or destructive large-scale machinery.

This may impact not only farming and our food systems but also ecological restoration, with strong effects on soil health, the emergence of new farming practices, and possibly better tools for reforestation and the management of invasive species.

Military

Although this raises complex ethical questions, it is a rule of history that any new technology is also investigated for its potential to give militaries an edge against their adversaries.

And as long as one nation pursues it, the others have to keep up.

AI is already used to identify targets and analyze data on the battlefield, including in ongoing real-life wars like Ukraine and Gaza, something we discussed in “Top 10 Drones And Drone Warfare Stocks”.

Among the most alarming recent developments is the latest version of the Russian Lancet, a suicide drone/missile that uses AI machine vision to identify and target enemy vehicles in the final segment of its flight.

Giving the kill decision to an automated system is obviously a very dangerous step, one that truly seems like material for a science fiction movie just before an AI rebellion against mankind.

So we can only hope that military forces globally will reach an understanding of which technologies can or cannot be deployed, similar to how nuclear weapons are managed.

Neural Network Risks

It is worth mentioning that Geoffrey Hinton, who won this year’s Nobel Prize, is also very concerned about the progress being made in AI technology.

After the Turing Award in 2018 and the Nobel Prize in 2024, his warnings are likely to be heard even more than before.

“I have suddenly switched my views on whether these things are going to be more intelligent than us.

I think they’re very close to it now and they will be much more intelligent than us in the future. How do we survive that?”

Geoffrey Hinton

Will our AI enthusiasm doom us to a future like in the Matrix or Terminator movies? Maybe not, but as ultra-complex neural networks are essentially black boxes that even their creators do not fully understand, ethical and safety rules are probably needed before a malfunctioning AI causes irreparable damage.

Investing in Neural Networks

Neural networks are now becoming almost synonymous with AI technology at large, as the disciplines within the sector increasingly merge.

As neural networks require large datasets for pre-training and training, they currently tend to be the domain of very large tech companies or very well-funded startups.

You can invest in AI-related companies through many brokers, and you can find here, on securities.io, our recommendations for the best brokers in the USA, Canada, Australia, and the UK, as well as many other countries.

If you are not interested in picking specific AI-related companies, you can also look into ETFs like the ARK Artificial Intelligence & Robotics UCITS ETF (ARKI), the Global X Robotics & Artificial Intelligence ETF (BOTZ), or the WisdomTree Artificial Intelligence and Innovation Fund (WTAI) which will provide a more diversified exposure to capitalize on AI.

Neural Network Companies

1. Microsoft

Microsoft has been at the center of the tech industry almost since its inception with its still-dominant operating system Windows.

Microsoft Corporation (MSFT)

It is now also a leader in enterprise software (Office 365, Teams, LinkedIn, Skype, GitHub), gaming (Xbox and the acquisition of multiple video game studios), and cloud computing (Azure).

More recently, it has made good progress in AI. This includes consumer-grade AI like Bing Image Creator and more business-focused initiatives, like Copilot for Microsoft 365 and Microsoft Research. Copilot is now being deployed to retail customers and smaller companies as well.

Source: Constellation Research

Microsoft has a reputation as the enterprise-centered tech giant, compared to more consumer-focused companies like Apple or Facebook. With AI becoming increasingly important in business models, Microsoft’s existing presence in cloud and enterprise services should give it a head start in deploying AI at scale and in acquiring customers.

Its collaboration with, and quasi-ownership of, AI development leaders like OpenAI (of ChatGPT fame) should also cement Microsoft’s position as an AI powerhouse.

2. NVIDIA

Nvidia initially held a dominant position in the graphics card (GPU) market, mostly used for high-end gaming and 3D modeling. GPUs can run many calculations in parallel, which sets them apart from central processors (CPUs).

NVIDIA Corporation (NVDA)

The design of its hardware proved a very good fit for cryptocurrency mining (especially Ethereum, before it abandoned mining in 2022), creating a strong wave of growth for the company.

Now, it appears to be equally powerful for training AIs, making Nvidia’s hardware the backbone of the AI revolution.

Nvidia is now developing custom computing systems for different AI applications, from self-driving cars to speech and conversational AI, generative AI, and cybersecurity.

Nvidia has likely not yet found all the possible use cases for its AI hardware, as illustrated by Microsoft’s research with PNNL. For example, Nvidia is now developing a whole range of solutions for drug discovery, as well as AI-powered medical devices and AI-assisted medical imaging.

Source: NVidia

In the very long run, competitors may start to seriously challenge Nvidia’s head start.

But for the foreseeable future, considering the explosion in demand for AI-dedicated computing power, Nvidia is likely to remain the prime supplier of the new AI-training data centers being built.

And if AI is a new tech gold rush, investment wisdom says to prefer the sellers of picks and shovels rather than betting on finding gold.

In the AI rush, the tools of choice are, for now, Nvidia’s AI chips.