Engineers may have just figured out how to give androids human-like facial expressions. A recently published study introduces the use of waveform movements to let robots express themselves naturally based on their internal state and circumstances. This work could one day make interactions between humans and androids easier and more emotionally rewarding. Here's what you need to know.

In the 1990 sci-fi thriller Total Recall, there's an iconic scene in which Douglas Quaid (Arnold Schwarzenegger) jumps into an autonomous taxi. When he gets in, an android that's little more than the top half of a humanoid robot greets him and asks for his destination. The robot, stuck in a single expression, blinks unnaturally before accepting the destination and driving off. For many people, this is still how androids come across: clunky and unnatural.

Source – Carolco Pictures

Even though engineers can build lifelike-looking robots, something always seems off once the machines begin to move and interact. For example, they may smile oddly or freeze in strange facial expressions. They also lack micro-expressions and can look unnatural when transitioning between emotional states. All of these factors have limited the ability of androids to interact autonomously and naturally with humans.

Traditional Method For Android Expression

The traditional method of providing androids with facial expressions is called the patchwork method. It involves loading the android with predetermined emotional states, which must be programmed in detail.

Over the years, this approach has grown more complex, with massive patchwork models storing hundreds of emotional states. Because each state involves complex movements, memory constraints can limit the range of an android's emotional responses.

Additionally, there is no reliable way to transition between these preset emotional states, which makes the entire exchange look fake and scripted. Thankfully, these issues may have been resolved by a group of innovative engineers seeking to drive android adoption.

Waveform Movement Study

Engineers recently unveiled a more accurate and responsive way to produce realistic facial expressions in real-time. The study, “Automatic generation of dynamic arousal expression based on decaying wave synthesis for robot faces,” was published in the Journal of Robotics and Mechatronics. It explores the concept of facial expression synthesis technology and how it is a crucial step in driving human-robot communications forward.

The study introduces waveform functions as a way to encode facial expression data. The waveforms can oscillate at different intensities to signal different levels of excitement more accurately than patchwork designs. The waveform method also eliminates hard shifts between facial expressions, allowing smooth transitions instead.
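To make the idea concrete, here is a minimal Python sketch of a generic decaying (damped) sine wave. It assumes the form amplitude × e^(−decay·t) × sin(2π·frequency·t); the study's actual wave functions and parameter names may differ, and `decaying_wave` is a hypothetical helper. A larger amplitude produces a more intense movement, while the exponential term pulls the motion back toward rest without any hard switch.

```python
import math

def decaying_wave(t: float, amplitude: float, frequency: float,
                  decay: float, onset: float = 0.0) -> float:
    """Hypothetical damped sinusoid: 0 before onset, then oscillates and fades toward 0 (rest)."""
    if t < onset:
        return 0.0
    dt = t - onset
    # exp(-decay * dt) shrinks the oscillation over time, so the movement relaxes on its own
    return amplitude * math.exp(-decay * dt) * math.sin(2 * math.pi * frequency * dt)

# One second of a hypothetical movement at two intensities, sampled at 100 Hz
weak = [decaying_wave(i / 100, amplitude=0.3, frequency=2.0, decay=4.0) for i in range(101)]
strong = [decaying_wave(i / 100, amplitude=0.9, frequency=2.0, decay=4.0) for i in range(101)]
```

Only the amplitude differs between the two samples, which mirrors the idea of signaling different intensities with the same underlying wave.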

Source – Osaka University

Notably, the system integrates deep structures that dynamically generate facial movements based on the machine's current state and scenario. Using decaying wave functions to synthesize command sequences offers significantly more control than previous methods.

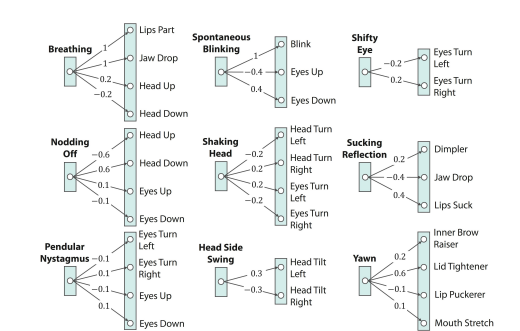

Waveform Movements

Each waveform movement drives the relevant actuators through a continuously shifting range of positions. Put simply, you can think of waveform movements as the things your face normally does, like blinking, breathing, smiling, yawning, and squinting.

These movements are combined to create complex facial expressions that resemble genuine human ones. Because the waveform movements can be overlaid, transitions are smoother and responses look more realistic. The intensity of each action can also be controlled by altering its waveform.

The engineers created a proprietary expression generation system that lets them adjust the dynamic properties of each movement, including speed, amplitude, oscillation, and duration. This strategy gives them near-endless expression possibilities.
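The overlaying described above can be sketched by summing several decaying waves that start at different times and then clipping the total to an actuator's range. The `Movement` class, the `actuator_command` function, the damped-sine form, and every numeric value below are illustrative assumptions, not the Osaka team's code.

```python
import math
from dataclasses import dataclass

@dataclass
class Movement:
    """A hypothetical facial movement (e.g. a blink) modeled as a decaying wave."""
    name: str
    amplitude: float  # peak strength of the movement
    frequency: float  # oscillation rate in Hz
    decay: float      # how quickly it fades back to rest (1/s)
    onset: float      # start time in seconds

    def value(self, t: float) -> float:
        if t < self.onset:
            return 0.0
        dt = t - self.onset
        return self.amplitude * math.exp(-self.decay * dt) * math.sin(2 * math.pi * self.frequency * dt)

def actuator_command(movements: list[Movement], t: float, limit: float = 1.0) -> float:
    """Overlay (sum) every active movement, then clip to the actuator's [-limit, limit] range."""
    total = sum(m.value(t) for m in movements)
    return max(-limit, min(limit, total))

# A quick blink overlaid on slow breathing that starts 0.2 s later
blink = Movement("blink", amplitude=0.6, frequency=3.0, decay=6.0, onset=0.0)
breathing = Movement("breathing", amplitude=0.3, frequency=0.3, decay=0.5, onset=0.2)
timeline = [actuator_command([blink, breathing], i / 50) for i in range(100)]
```

Summing keeps every movement's contribution continuous, so a new movement can start while another is still fading out; the clip is a simple safeguard against overdriving the hardware.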

Testing Waveform Movements Study

To test the waveform movement approach, the team used a child-type android robot named Affetto. Affetto was designed from the ground up with a face driven by 21 actuators. The actuators sit behind the android's lifelike skin, and each one can be positioned individually using waveform movements.
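One way to picture how a single movement reaches individual actuators is a weight table that scales the wave for each actuator it affects. This is a hypothetical sketch: apart from the count of 21 actuators, the indices, weights, damped-sine form, and function names are placeholders, not Affetto's real layout or control software.

```python
import math

NUM_ACTUATORS = 21  # Affetto's face uses 21 actuators, per the article

def decaying_wave(t: float, amplitude: float, frequency: float, decay: float) -> float:
    """Hypothetical damped sinusoid that starts at t = 0 and fades toward rest."""
    if t < 0:
        return 0.0
    return amplitude * math.exp(-decay * t) * math.sin(2 * math.pi * frequency * t)

# Hypothetical mapping: which actuators a blink drives and how strongly (0..1).
# These indices are illustrative placeholders only.
BLINK_WEIGHTS = {3: 1.0, 4: 1.0, 5: 0.3}

def propagate(weights: dict[int, float], wave_value: float) -> list[float]:
    """Spread one wave sample across all actuators; unlisted actuators stay at rest (0.0)."""
    commands = [0.0] * NUM_ACTUATORS
    for idx, w in weights.items():
        if not 0 <= idx < NUM_ACTUATORS:
            raise ValueError(f"actuator index {idx} out of range")
        commands[idx] = w * wave_value
    return commands

commands = propagate(BLINK_WEIGHTS, decaying_wave(0.1, 1.0, 2.0, 5.0))
```

In practice, the per-actuator commands from several movements would be summed before being sent to the hardware.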

Source – Osaka University

The engineers then applied their computational model, which adjusts the rate of each movement according to the robot's level of excitement (arousal). As the team varied these levels, human onlookers judged the robot's emotional state and how natural its expressions appeared. The team then tested combinations of states and had human observers assess the transitions between them. The tests were repeated several times, with promising results.
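The study ties expression dynamics to the robot's arousal (excitement) level. A simple way to sketch that relationship is to interpolate wave parameters between a calm preset and an excited preset. The linear mapping, the preset values, and the names `WaveParams` and `params_for_arousal` are assumptions for illustration only; the paper's model may work differently.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class WaveParams:
    amplitude: float  # movement intensity
    frequency: float  # oscillation rate in Hz
    decay: float      # fade-out rate in 1/s

def lerp(a: float, b: float, x: float) -> float:
    """Linear interpolation written so that x = 0 returns a and x = 1 returns b exactly."""
    return a * (1.0 - x) + b * x

def params_for_arousal(arousal: float, calm: WaveParams, excited: WaveParams) -> WaveParams:
    """Blend calm and excited presets; arousal is clamped to [0, 1] (0 = calm, 1 = highly excited)."""
    x = max(0.0, min(1.0, arousal))
    return WaveParams(
        amplitude=lerp(calm.amplitude, excited.amplitude, x),
        frequency=lerp(calm.frequency, excited.frequency, x),
        decay=lerp(calm.decay, excited.decay, x),
    )

# Hypothetical presets for a breathing-like movement
CALM = WaveParams(amplitude=0.2, frequency=0.25, decay=0.5)     # slow, shallow
EXCITED = WaveParams(amplitude=0.8, frequency=1.0, decay=2.0)   # quick, deep
mid = params_for_arousal(0.5, CALM, EXCITED)
```

Clamping keeps out-of-range arousal values from producing parameters beyond either preset.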

Results of the Waveform Movements Study

The results of the waveform study demonstrate how tuned waveform facial movements can help androids express emotion and communicate naturally with humans. The superimposed decaying waves provide the added benefit of allowing the facial expressions to return to a resting state naturally.

Noticeable Movement Transitions Eliminated

The study showed how decaying waveforms allow for fine-tuning and a natural recovery from each expression. Problems that plagued earlier patchwork approaches, such as unnatural movements during transitions, are eliminated thanks to the smooth, overlapping nature of the waveform movement strategy.

Benefits of the Waveform Movements Study

Waveform movement technology offers several benefits to the robotics sector. For one, the growing use of LLMs means that machines and humans can communicate through simple text and voice prompts. It's logical that androids with these capabilities should also express their moods dynamically, enabling a new era of relatable communication with machines.

Reduces Developer Time

Another major benefit of waveform movement-based facial expression technology is that robotics engineers no longer need to spend time programming every facial expression by hand. Such a module could one day be integrated into devices as easily as adding an LLM. Together, these tools would allow developers to create machines that can talk and express complex emotions like humans.

Future Use Case Scenarios for Waveform Movements Technology

Waveform movement technology will find a home across the robotics sector and beyond. Androids that can express emotion could be used in industries ranging from healthcare to entertainment. These devices would let people read their robots' responses as easily as they read a friend's.

Business Applications

You can expect to speak with more androids in the future when dealing with large corporations. These devices could take over front desk, secretarial, and other crucial roles in the near future. They will offer enhanced features and capabilities, including the ability to communicate nonverbally with coworkers.

Elderly Care

Many see androids as one of the best ways to help fight loneliness among the elderly. Millions of elderly people around the world go days at a time without any human interaction. Sadly, prolonged isolation is linked to cognitive decline and other health problems. A humanoid android with realistic human emotions could help those who lack human companionship feel more connected, potentially helping them stave off conditions such as Alzheimer's.

Researchers

The waveform movement study was led by Hisashi Ishihara of Osaka University. Ishihara and his team of engineers now seek to expand their concept and open their models to further collaboration. The goal is to create a universal waveform movement standard that can be used to program realistic expressions into tomorrow's androids.

Companies That Could Benefit from the Waveform Movements Study

Multiple robotics firms could gain from integrating this technology into their offerings. Giving robots the ability to communicate more effectively would make many of these companies' products more relatable. As such, there's much to gain for those who integrate waveform movement facial technology first.

Tesla

Tesla (TSLA) is a well-known EV and technology manufacturer based in the US. Its outspoken CEO, Elon Musk, is one of the richest people in the world and continues to push the boundaries of sustainability, EVs, space travel, and robotics.

In August 2021, Tesla introduced the concept of its Optimus humanoid robot, also known as the Tesla Bot. The robot is intended to one day handle multiple tasks in factories around the world. Notably, the device was showcased in October 2024, performing several tasks around the plant. Tesla's CEO plans to have the robot enter service in 2025.

Tesla could take its Optimus robotics platform to the next level by integrating a humanlike face powered by waveform movements. This would allow the robot to interact seamlessly with its human coworkers. It could express emotions to workers regardless of whether they can hear it or understand the language it is programmed to speak.

If Tesla can integrate waveform technology into its offerings, it could be the first time a commercially available humanoid robot hits the market with such capabilities and performance. Such a move could lift Tesla's stock and strengthen its market position.

Waveform Movements – Bring Man and Machine Closer

Using waveform movements instead of pre-recorded facial sequences makes a lot of sense. After all, human emotions also play across the face with different intensities in different areas, depending on the situation. As this technology improves, it may help bring about the day when androids easily pass the Turing test.
